MicroTorch - Deep Learning from Scratch!

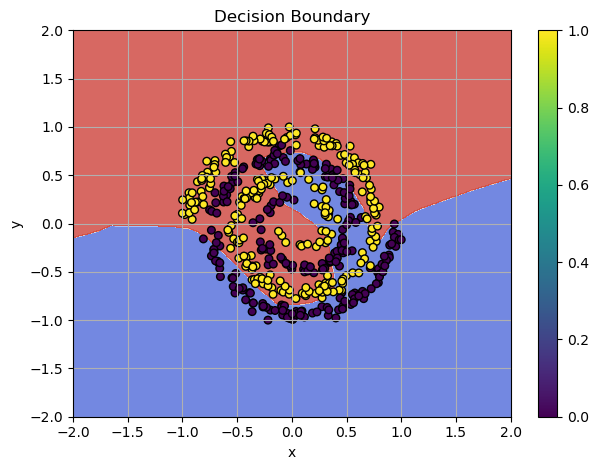

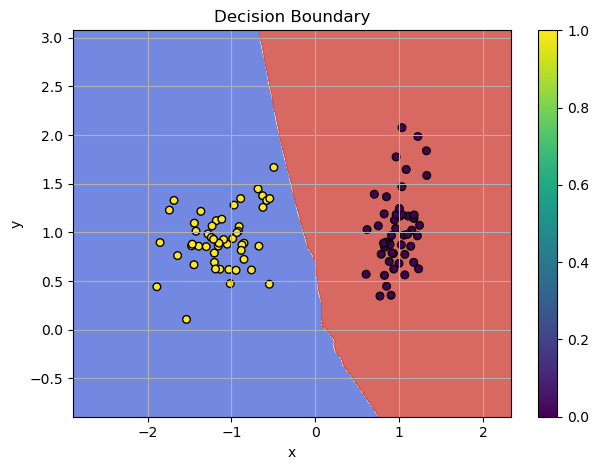

Implementing deep learning algorithms means managing data flow in two directions: forward and backward. The forward pass is usually straightforward, but the backward pass is harder. As discussed in previous posts, implementing backpropagation by hand requires a solid grasp of calculus. Even a small mistake, such as a dropped term in a derivative, can silently produce wrong gradients and a model that fails to train.
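
To see how easily a hand-derived gradient goes wrong, here is a small sketch. It is our own example, not code from MicroTorch. It uses a one-weight logistic unit with squared-error loss and derives its gradient by hand. It also includes a version with a typical slip, where the sigmoid derivative `s * (1 - s)` is dropped. A central finite-difference check then tells the two apart. The function names (`loss`, `grad_correct`, `grad_buggy`, `numerical_grad`) are hypothetical.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def loss(w: float, x: float, y: float) -> float:
    # Squared error of a one-weight logistic unit: L = (sigmoid(w * x) - y) ** 2
    return (sigmoid(w * x) - y) ** 2


def grad_correct(w: float, x: float, y: float) -> float:
    s = sigmoid(w * x)
    # Chain rule: dL/ds * ds/dz * dz/dw = 2(s - y) * s(1 - s) * x
    return 2.0 * (s - y) * s * (1.0 - s) * x


def grad_buggy(w: float, x: float, y: float) -> float:
    s = sigmoid(w * x)
    # Typical slip: the sigmoid derivative s * (1 - s) has been forgotten
    return 2.0 * (s - y) * x


def numerical_grad(f, w: float, eps: float = 1e-6) -> float:
    # Central finite difference: (f(w + eps) - f(w - eps)) / (2 * eps)
    return (f(w + eps) - f(w - eps)) / (2.0 * eps)


w, x, y = 0.5, 2.0, 1.0
num = numerical_grad(lambda w_: loss(w_, x, y), w)
print(f"numerical: {num:.6f}")
print(f"correct:   {grad_correct(w, x, y):.6f}")
print(f"buggy:     {grad_buggy(w, x, y):.6f}")
```

The numerical estimate agrees with `grad_correct` to many decimal places and is far from `grad_buggy`. Gradient checks like this are slow, so they are a debugging aid rather than a replacement for backpropagation.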

Fortunately, modern frameworks like PyTorch simplify this process with autograd, an automatic differentiation system. Autograd records the operations performed during the forward pass, then applies the chain rule backward through them to compute gradients. This removes the need to derive and code gradient calculations by hand, which makes development faster and less error-prone.
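
The following tiny example, our own and not from the original post, makes "applying the chain rule backward" concrete. Take $z = x \cdot y + x$ and split it into the intermediate $a = x \cdot y$, so that $z = a + x$. Write $\bar{v} = \partial z / \partial v$ for the gradient of the output with respect to a node $v$. Reverse-mode differentiation starts from $\bar{z} = 1$ and pushes gradients back through each recorded operation. When a variable is used more than once, as $x$ is here, it sums the contributions:

$$
\bar{z} = 1, \qquad
\bar{a} = \bar{z} \cdot \frac{\partial z}{\partial a} = 1, \qquad
\bar{y} = \bar{a} \cdot \frac{\partial a}{\partial y} = x, \qquad
\bar{x} = \bar{a} \cdot \frac{\partial a}{\partial x} + \bar{z} \cdot \frac{\partial z}{\partial x}\bigg|_{a\ \text{fixed}} = y + 1
$$

At $x = 2$, $y = 3$ this gives $\bar{x} = 4$ and $\bar{y} = 2$, which matches differentiating $z = xy + x$ directly.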

Now, let's build the backbone of such a system: the Tensor class!
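
The sketch below is a minimal, scalar-only Python version of what such a class has to hold. It is not MicroTorch's actual implementation. The class layout, the attribute names (`data`, `grad`, `_parents`, `_backward`) and the restriction to `+` and `*` between two `Tensor`s are all assumptions made for illustration. Each operation stores its inputs and a closure that applies the local derivative. `backward()` then orders the graph topologically and runs those closures from the output back to the inputs.

```python
from __future__ import annotations

from typing import Callable, Iterable


class Tensor:
    """Scalar value that remembers how it was computed (hypothetical sketch)."""

    def __init__(self, data: float, parents: Iterable[Tensor] = ()):
        self.data = float(data)
        self.grad = 0.0                    # d(output)/d(self), filled in by backward()
        self._parents = tuple(parents)     # nodes this value was computed from
        self._backward: Callable[[], None] = lambda: None  # pushes self.grad to parents

    def __add__(self, other: Tensor) -> Tensor:
        out = Tensor(self.data + other.data, (self, other))

        def _backward() -> None:
            # d(a + b)/da = 1 and d(a + b)/db = 1
            self.grad += out.grad
            other.grad += out.grad

        out._backward = _backward
        return out

    def __mul__(self, other: Tensor) -> Tensor:
        out = Tensor(self.data * other.data, (self, other))

        def _backward() -> None:
            # d(a * b)/da = b and d(a * b)/db = a
            self.grad += other.data * out.grad
            other.grad += self.data * out.grad

        out._backward = _backward
        return out

    def backward(self) -> None:
        # Topological order: every node appears after all of its parents.
        order: list[Tensor] = []
        visited: set[int] = set()

        def visit(node: Tensor) -> None:
            if id(node) not in visited:
                visited.add(id(node))
                for parent in node._parents:
                    visit(parent)
                order.append(node)

        visit(self)
        self.grad = 1.0                    # seed: d(output)/d(output) = 1
        for node in reversed(order):       # walk from the output back to the inputs
            node._backward()


# Usage: z = x * y + x, so dz/dx = y + 1 and dz/dy = x
x = Tensor(2.0)
y = Tensor(3.0)
z = x * y + x
z.backward()
print(z.data, x.grad, y.grad)  # prints: 8.0 4.0 2.0
```

Gradients are accumulated with `+=`, so a value used twice (like `x` in the usage lines) receives both contributions. The flip side is that calling `backward()` again without resetting `grad` to zero adds to the old values. PyTorch likewise accumulates into `.grad`, which is why training loops zero gradients between steps. The recursive topological sort is fine for small graphs but would hit Python's recursion limit on very deep ones.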

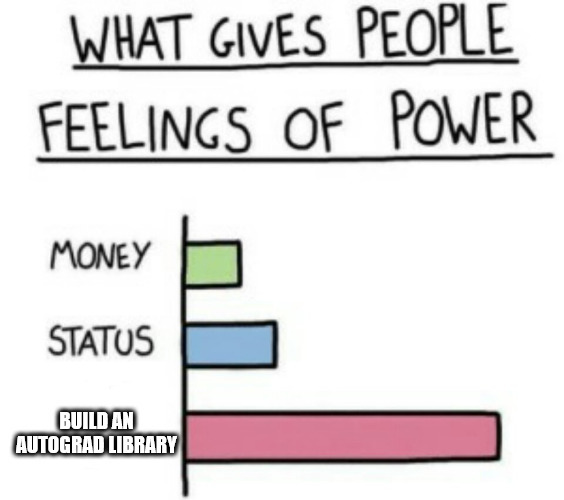

Build an autograd!